Are We Talking to AI the Right Way?

Talking to AI is becoming a core skill in the modern world. Whether you’re an engineer, a marketer, or a product designer, knowing how to interact effectively with large language models (LLMs) like ChatGPT, Claude, or Gemini can help you do your job faster and better. But here’s the catch: it’s not just about what you say; it’s also about the context the AI has around what you say. This is where prompt engineering and context engineering come in. Both are crucial for unlocking the full potential of AI, but they serve different purposes, and the line between them is thinner than many realize.

What Is Prompt Engineering, Really?

Prompt engineering is the craft of writing precise, effective, and goal-oriented inputs to LLMs. Think of it as asking the perfect question or giving a very specific instruction. Whether you’re telling the model to act as a legal advisor or to generate a summary in bullet points, prompt engineering is about structuring your query so that the model responds accurately and usefully.

It relies on techniques like:

- Using clear and concise language

- Adding structured formats like “Act as…” or “Explain step by step”

- Providing few-shot examples to guide the model

- Specifying tone, length, or target audience
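
To make these techniques concrete, here is a minimal Python sketch that assembles a single prompt from a role, an instruction, length and audience constraints, and a set of few-shot examples. The function name `build_prompt`, its parameters, and the sample reviews are hypothetical illustrations rather than any tool’s API; the result is just a string you could paste into any chat interface.

```python
def build_prompt(instruction, query, role=None, audience=None,
                 length=None, examples=()):
    """Combine common prompt-engineering techniques into one prompt string."""
    parts = []
    if role:
        # Role framing, e.g. "Act as a legal advisor."
        parts.append(f"Act as {role}.")
    parts.append(instruction)

    # Explicit constraints on audience and length.
    constraints = []
    if audience:
        constraints.append(f"Audience: {audience}.")
    if length:
        constraints.append(f"Length: {length}.")
    if constraints:
        parts.append(" ".join(constraints))

    # Few-shot examples: input/output pairs that show the expected pattern.
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}\nOutput: {example_output}")

    # End with the real input and an open "Output:" slot for the model to fill.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_prompt(
        instruction="Classify the sentiment of each customer review as positive or negative.",
        query="The checkout page kept crashing.",
        role="a customer-experience analyst",
        length="one word",
        examples=[
            ("Delivery was fast and the packaging was great.", "positive"),
            ("The product broke after two days.", "negative"),
        ],
    ))
```

The open `Output:` slot at the end invites the model to continue the pattern set by the examples, which is the basic idea behind few-shot prompting.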

Prompt engineering is intuitive, quick to learn, and widely used by content creators, marketers, researchers, and anyone trying to get high-quality output from AI.

So Then, What Is Context Engineering?

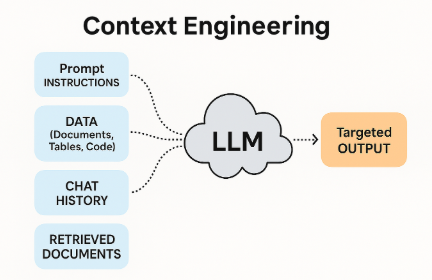

Context engineering, on the other hand, goes a step deeper. It’s about shaping the full environment in which the AI operates. Context engineering determines not just the prompt, but everything the model has access to at the moment it’s prompted. This includes:

- Prior chat history or ongoing conversation memory

- System-level behavior instructions (e.g., “You are a formal assistant who avoids speculation.”)

- Relevant documents, articles, or data injected during the exchange

- Persistent user preferences, goals, or metadata

- API and tool integrations within agents
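
One way to picture context engineering is as code that decides what surrounds the user’s prompt. The sketch below assumes the role/content message format that many chat APIs use; it packs system instructions, user preferences, retrieved documents, and available tools into a system message, then appends the conversation history and the new prompt. `ContextBundle` and `assemble_messages` are hypothetical names, and listing tools as plain text is a simplification: agent frameworks usually pass tool definitions through a separate, structured field.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBundle:
    """Everything the model should see besides the user's new prompt."""
    system_instructions: str
    user_preferences: dict = field(default_factory=dict)
    retrieved_documents: list = field(default_factory=list)
    history: list = field(default_factory=list)  # prior {"role", "content"} turns
    tools: list = field(default_factory=list)    # names of tools the agent may call


def assemble_messages(bundle, user_prompt):
    """Turn a ContextBundle plus the new prompt into a chat message list."""
    system_parts = [bundle.system_instructions]
    if bundle.user_preferences:
        prefs = "; ".join(f"{key}: {value}" for key, value in bundle.user_preferences.items())
        system_parts.append(f"User preferences: {prefs}")
    if bundle.retrieved_documents:
        docs = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(bundle.retrieved_documents, start=1))
        system_parts.append(f"Reference documents:\n{docs}")
    if bundle.tools:
        # Simplification: real agent APIs usually take tool schemas separately.
        system_parts.append("Available tools: " + ", ".join(bundle.tools))

    messages = [{"role": "system", "content": "\n\n".join(system_parts)}]
    messages.extend(bundle.history)  # ongoing conversation memory
    messages.append({"role": "user", "content": user_prompt})
    return messages


if __name__ == "__main__":
    bundle = ContextBundle(
        system_instructions="You are a formal assistant who avoids speculation.",
        user_preferences={"language": "English", "units": "metric"},
        retrieved_documents=["Refund policy: purchases can be returned within 30 days."],
        history=[
            {"role": "user", "content": "I ordered a jacket last week."},
            {"role": "assistant", "content": "Thank you. How can I help with that order?"},
        ],
        tools=["lookup_order"],
    )
    for message in assemble_messages(bundle, "Can I still return it?"):
        print(f"{message['role']}: {message['content']}\n")
```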

Context engineering is what turns a chatbot into a digital assistant or an AI tool into a smart agent.

Where Do These Two Overlap?

While prompt and context engineering may seem distinct, in practice they are tightly intertwined. A well-written prompt often fails without the right context.

For example:

Prompt: “What should I pack for my trip?”

Without context: AI gives generic travel tips.

With context: The model knows you’re going to Iceland in December for hiking, so it suggests thermal gear, crampons, and layered clothing.
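
Here is the same comparison expressed as data. In this hypothetical sketch (`trip_messages` is an illustrative helper, not a library function), the user’s prompt is identical in both cases; only the context placed in front of it changes, and that difference is what lets the model move from generic tips to Iceland-specific advice.

```python
def trip_messages(prompt, trip=None):
    """Build a message list, optionally preceded by known facts about the trip."""
    messages = []
    if trip:
        facts = ", ".join(f"{key}: {value}" for key, value in trip.items())
        messages.append({"role": "system",
                         "content": f"Known facts about the user's trip: {facts}."})
    messages.append({"role": "user", "content": prompt})
    return messages


prompt = "What should I pack for my trip?"
without_context = trip_messages(prompt)
with_context = trip_messages(
    prompt, {"destination": "Iceland", "month": "December", "activity": "hiking"}
)

print(without_context)
print(with_context)
```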

Modern AI systems operate over multiple layers of context:

- The user’s current prompt

- The full conversation history

- System behavior instructions

- Retrieved documents or embedded knowledge

The richer and more relevant the context, the more helpful the model’s response tends to be, and the more central context engineering becomes to the overall outcome.

Why Is Context Engineering Growing in Importance?

Prompt engineering first rose to fame with the launch of tools like ChatGPT, where tweaking and refining inputs led to better results. But as use cases grew more complex, it became clear that prompting alone wasn’t enough. Systems needed memory, relevance, personalization, and the ability to pull in knowledge dynamically.

This is where context engineering gained prominence. It is the backbone of robust, intelligent applications such as:

- Customer support bots that remember past complaints

- AI tutors that adapt to a learner’s strengths and weaknesses

- Enterprise copilots that fetch data from private sources in real time

These advanced behaviors rely more on engineered context than clever prompts.
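
A common way to let an assistant fetch data from private sources is retrieval: pick the documents most relevant to a question and inject them into the context. The sketch below ranks documents by simple word overlap with the query, a deliberately crude stand-in for the embedding-based similarity search that production retrieval pipelines typically use. The helper names `retrieve` and `build_grounded_prompt` and the sample documents are hypothetical.

```python
import re


def tokenize(text):
    # Lowercase and split into alphanumeric words; punctuation is ignored.
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query, documents, k=2):
    """Return up to k documents that share the most words with the query."""
    query_terms = set(tokenize(query))
    scored = []
    for doc in documents:
        overlap = len(query_terms & set(tokenize(doc)))
        if overlap > 0:  # skip documents with no words in common
            scored.append((overlap, doc))
    # Highest overlap first; Python's sort is stable, so ties keep input order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_grounded_prompt(question, documents, k=2):
    """Inject the retrieved documents into the prompt as context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    docs = [
        "Q3 revenue grew 12% in the EMEA region.",
        "The office cafeteria opens at 8 am.",
        "EMEA headcount rose in Q3.",
    ]
    print(build_grounded_prompt("How did EMEA revenue change in Q3?", docs))
```

Word overlap counts common words like “in” as matches, which is one reason real systems prefer embeddings or at least stop-word filtering.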

Which One Should You Focus On?

For non-technical professionals, prompt engineering remains the most approachable skill. It’s fast, impactful, and often enough for quick wins. However, understanding even the basics of context, like how memory affects a conversation or how system prompts guide tone, can significantly improve results.

For technical professionals, both skills matter, but context engineering becomes crucial. Whether you’re building AI products, integrating retrieval pipelines, or designing tool-augmented agents, context is what makes the system smart and scalable.

Entrepreneurs and builders, especially, benefit from combining both:

- Use prompt engineering to refine tone and clarity

- Apply context engineering to personalize, scale, and automate workflows

Will Prompting Be Automated? What Happens Then?

Looking ahead, prompt engineering may become increasingly automated. AI tools are already learning how to reframe, rewrite, or optimize prompts behind the scenes. In many cases, users won’t even realize prompting is happening.

Context engineering, however, will remain a vital human-driven skill. Designing workflows, managing memory, curating data sources, and orchestrating intelligent behavior will be essential for AI systems that aim to deliver more than just good responses; they’ll be expected to deliver results.
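
Managing memory is a good example of this kind of design work, because a model’s context window is finite. The sketch below keeps the most recent conversation turns that fit within a budget. It uses character counts as a rough, assumed proxy for tokens, and `trim_history` is a hypothetical helper rather than part of any specific framework. Real systems often go further, for example by summarizing older turns instead of dropping them.

```python
def trim_history(history, max_chars):
    """Keep the most recent turns whose combined content fits within max_chars.

    Character length is used as a rough stand-in for token count.
    """
    kept = []
    used = 0
    for turn in reversed(history):  # walk from newest to oldest
        size = len(turn["content"])
        if used + size > max_chars:
            break  # older turns are dropped once the budget is exhausted
        kept.append(turn)
        used += size
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    history = [
        {"role": "user", "content": "I'm planning a hiking trip to Iceland in December."},
        {"role": "assistant", "content": "Great choice. Do you need gear advice?"},
        {"role": "user", "content": "Yes, what should I pack?"},
    ]
    print(trim_history(history, max_chars=80))
```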

The Final Word: Talking vs. Listening

In the end, prompt engineering helps you talk to AI. Context engineering teaches AI how to listen.

If your goal is quick experimentation or lightweight interaction, prompting might be all you need. But if you’re aiming to build lasting, intelligent, and personalized systems, context engineering is the true differentiator. In this new era of AI, it’s not just what you say that matters; it’s everything the AI already knows when you say it. For more information, see Google’s detailed prompt engineering guide.

Learn more about our AI Services & Solutions. Feel free to drop us a line at connect@dataslush.com, and we’ll take it from there.