At DataSlush, we are working on multiple proof-of-concept (POC) projects built on Large Language Models (LLMs), and it has been interesting to see how they apply across various business domains. In this blog post, I am going to share some of the lessons we learned while using Large Language Models for different problems.

1. Be reasonable about what to expect from Large Language Models

LLMs are not 100% deterministic, so you need to be careful when using them. They are good at summarizing the knowledge they have, but they do not work well for calculations that need exact reasoning. So make sure your use case is one where you expect the Large Language Model to generate content based on instructions that don't involve much mathematical calculation. We faced this issue while working on a POC of a Math Tutor.

2. Divide larger problems into smaller problems

What we have seen so far is that if we ask a Large Language Model to generate output for a large problem made up of multiple small problems, it starts hallucinating more. The approach that has given us good results is to divide large problems into smaller ones. If you create multiple prompts and combine their outputs to solve the larger problem, it works better than a single prompt.
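As a rough illustration of this idea, here is a minimal Python sketch. It assumes the model is available as a plain function that takes a prompt string and returns text; the names `solve_in_steps` and `fake_llm` are hypothetical, and `fake_llm` is only a stand-in so the example runs without any real model.

```python
from typing import Callable, List


def solve_in_steps(llm: Callable[[str], str], sub_prompts: List[str]) -> str:
    """Send one prompt per sub-problem and join the answers in order."""
    answers = []
    for prompt in sub_prompts:
        # Each call only sees its own small problem, not the whole task.
        answers.append(llm(prompt).strip())
    return "\n\n".join(answers)


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call (assumption)."""
    return f"[answer to: {prompt}]"


if __name__ == "__main__":
    report = solve_in_steps(
        fake_llm,
        [
            "Summarize the customer complaint in one sentence.",
            "List the products mentioned in the complaint.",
            "Draft a polite reply to the complaint.",
        ],
    )
    print(report)
```

In a real application, a later prompt might also need the answer from an earlier one; this sketch keeps the sub-problems independent for simplicity.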

3. Think about corner/edge cases first, even before solving the main problem

In many applications, we have ended up spending more time on edge/corner cases than on the main problem. You need to think carefully about all the edge cases first, as they become a headache to handle at a later stage of development. We recommend thoroughly defining the list of edge cases up front.

4. Apply a processing layer for both input and output

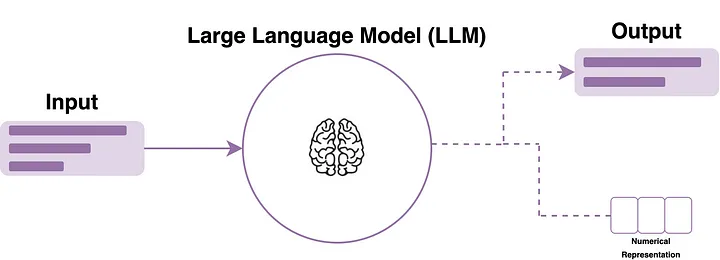

If there is no processing layer for both input and output, Large Language Models will start producing unwanted output. In a few applications, we have seen that the user's input needs to be parsed in a specific way before it is sent to the LLM. The same applies to the output generated by Large Language Models.
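The sketch below shows one possible shape for such a layer in Python. It is an illustration under assumptions, not our production code: the function names, the 2000-character limit, and the expectation that the model replies with a JSON object containing an `answer` key are all hypothetical choices made for the example.

```python
import json
import re
from typing import Callable


def preprocess(user_input: str, max_chars: int = 2000) -> str:
    """Input layer: collapse whitespace, reject empty input, cap the length."""
    cleaned = re.sub(r"\s+", " ", user_input).strip()
    if not cleaned:
        raise ValueError("empty input")
    return cleaned[:max_chars]


def postprocess(raw_output: str) -> dict:
    """Output layer: pull the JSON object out of the reply and validate it."""
    # Models often wrap the JSON in extra chatter, so search for the braces.
    match = re.search(r"\{.*\}", raw_output, flags=re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in model output")
    data = json.loads(match.group(0))
    if "answer" not in data:  # "answer" is an assumed key for this sketch
        raise ValueError("model output is missing the 'answer' field")
    return data


def ask(llm: Callable[[str], str], user_input: str) -> dict:
    """Run the input layer, the model call, and the output layer in order."""
    return postprocess(llm(preprocess(user_input)))
```

Keeping both layers as ordinary functions means they can be tested without calling a model at all, for example by passing a small stub function as `llm`.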

5. Give detailed instructions in the prompt

We have seen that providing detailed instructions for a specific task helps generate better results from Large Language Models. We have religiously followed the GPT best practices by OpenAI, and they have helped us immensely in obtaining structured output.
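To make this concrete, here is a small Python sketch of a prompt builder that spells out the task, the rules, and the expected output format, and wraps the user text in delimiters. The function name `build_prompt` and the exact layout are assumptions made for illustration, not a prescribed template.

```python
from typing import List


def build_prompt(task: str, rules: List[str], output_format: str, user_text: str) -> str:
    """Assemble a detailed prompt: task, numbered rules, output format, delimited text."""
    rule_lines = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    return (
        f"Task: {task}\n\n"
        f"Rules:\n{rule_lines}\n\n"
        f"Output format: {output_format}\n\n"
        # Triple quotes mark clearly where the user-supplied text starts and ends.
        f'Text:\n"""\n{user_text}\n"""'
    )


if __name__ == "__main__":
    print(
        build_prompt(
            task="Summarize the support ticket.",
            rules=["Use at most two sentences.", "Do not invent details."],
            output_format='JSON with a single key "summary"',
            user_text="My order arrived late and the box was damaged.",
        )
    )
```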

6. Utilise tools like LangChain

LangChain has played a vital role in our proof-of-concept projects. It has helped us iterate faster during development. We highly recommend using LangChain for developing Large Language Model applications.

At DataSlush, our approach to validating Large Language Model projects is grounded in practical business impact and technical rigor. We start by working closely with stakeholders to define clear objectives and select use cases that promise measurable value, such as automating document review or enhancing customer interactions. Our team combines deep domain expertise with advanced AI capabilities to design modular solutions that are both scalable and cost-effective.

Each project is tested with real-world data and evaluated against key metrics like accuracy, response time, and user satisfaction. We address risks early by monitoring for issues such as bias or hallucinations, and we incorporate human feedback to refine outcomes. This structured, transparent process ensures every initiative is positioned for seamless transition to production.

At DataSlush, we have expertise with Large Language Models. As a research- and innovation-driven organization, we establish proofs of concept and create a minimum viable solution before deploying it to production. Feel free to reach out to us for our Artificial Intelligence services. As a Google Cloud partner, we continuously strive for innovation and excellence in Data & AI.